French immigrants are eating our pets!

You have never seen the all-elusive catsnail? Bummer, you should look harder.

It’s a snat. They are not easy to catch, because they are fast. Also, they never land on their shell.

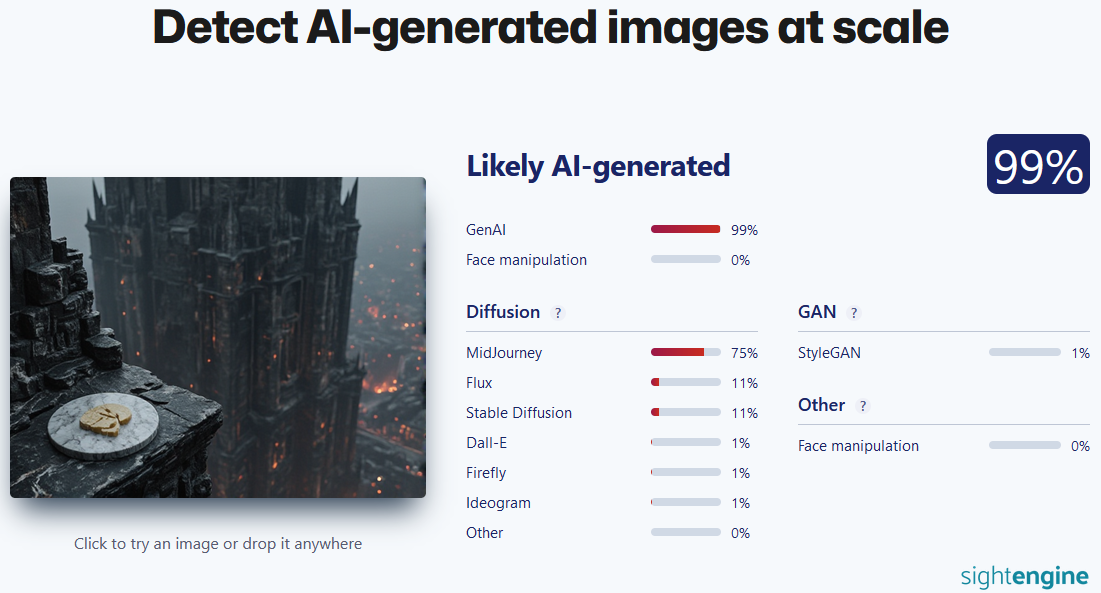

Honestly, it’s pretty good, and it still works if I use a lower-resolution screenshot without metadata (I haven’t tried adding noise or overlaying something else, but those might break it). This is Pixelwave, not Midjourney, though.

That is a weird looking rabbit

I don’t get it. Maybe it’s right? Maybe a human made this?

The picture doesn’t have to be “real”, it just has to be non-AI. Maybe this was made in Blender and Photoshop or something.

Check the snail house. The swirl has two endings. Definitely AI

deleted by creator

Deleted by creator

created by deletor

Or maybe your expectations of AI detection are too high.

That’s a normal housecat. Not sure what people are confused about

I wanted to get a cat but I discovered I was allergic to the slime trail.

Miao

I mean, it could be a manual photoshop job. Just because it’s not AI doesn’t mean it’s real.

But also the detector is probably wrong - it’s likely an AI image using a different model than the detector was trained to detect.

> I mean, it could be a manual photoshop job.

It could, but the double spiral in the shell indicates AI to me. Snail shells don’t grow like that. If it was a manual job, they would have used a picture of a real shell.

Edit: plus the cat head looks weird where it connects to the body, and the markings don’t look right to me.

Agreed. The aggressive depth of field is another smoking gun that usually indicates an AI image.

Also the fact that the grain on the side of the shell is perpendicular to the grain on the top, and it changes where the cat ear comes up in front of it.

A very telltale sign of AI is a change of pattern in something when a foreground object splits it.

Not saying it’s always a guarantee, but it’s a common quirk and it’s pretty easy to identify.

I can tell from some of the pixels and from seeing quite a few shops in my time.

Your reference is ancient and dusty and it makes me feel old. Stop it.

Snail shells don’t grow like that but this is clearly a snat, not a snail.

Even cnailshells would have to adhere to the basic laws of conchology though

There were a lot of really good images like that well before AI. Anyone remember Photoshop Friday?

The shell looks AI-generated though; if it had been photoshopped, a real snail shell would’ve been used for the source image.

There’s a sort of… sheen to a lot of AI images. Obviously you can prompt this away if you know what you’re doing, but it’s developing a bit of a look to my eye when people don’t do that.

They’re often too smooth.

Can we bring that back?

…you know people made fake pictures before image generation, right?

They made fake pictures before computers existed too.

This obviously can’t be true, how did they do it without Photoshop? /s

I mean, optical illusions have been used to fool audiences for years.

https://youtube.com/shorts/bsyRnmdceqQ?si=SfUM2mssqc1sjvNt

This is a motion picture of course but the idea is “faking” an image isn’t too far off.

Hehe, I know, I’m just being silly - the /s on my message means it’s in a sarcastic tone :) but thanks for taking the time to share that video!

I’m an idiot, thank you for the explanation, I got got

I’ve seen the cave paintings: deer and horses everywhere, but when I look around, nothing but rocks and trees.

Ignorant Americans, never even heard of the common snailcat

Where the fuck are you from that they aren’t called catsnails? Odd. Been catsnails here since I can remember.

“We investigated ourselves and found nothing wrong.”

Back2thefuture_iknowthisone.png

That’s a song by The Police, right?

Iseewhatyoudidthere.jpg

LOL

cute snat…

That’s clearly a cail

Are we all looking at the same snussy?

We get them a lot around here. They don’t make for good pets, but they keep the borogoves at bay.

Which is great, honestly. Borogoves themselves are fine, but it’s not worth the risk letting them get all mimsy.

There are a bunch of reasons why this could happen. First, it’s possible to “attack” some simpler image classification models: if you get a large enough sample of their outputs, you can mathematically derive a way to process any image so that it won’t be correctly identified. There have also been reports that even simpler processing, such as blending a real photo of a wall into a synthetic image at a very low percentage, can trip up detectors that haven’t been trained to be more discerning.

But it’s all in how you construct the training dataset, and I don’t think any of this is a good enough reason to give up on using machine learning for synthetic media detection in general. In fact, this example gives me the idea of using autogenerated captions as an additional input to the classification model. The challenge there, as in general, is keeping such a model from assuming that all anime is synthetic, since “AI artists” seem to be overly focused on anime and related styles…
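For anyone curious what the low-percentage blending mentioned above looks like in practice, here is a rough sketch. The function name `blend_images`, the 3% weight, and the random arrays standing in for the two pictures are all my own assumptions for illustration, not anything taken from a specific report, and nothing here shows that a particular detector is actually fooled by it.

```python
import numpy as np


def blend_images(synthetic, real, alpha=0.03):
    """Alpha-blend `real` into `synthetic`.

    `alpha` is the weight given to the real photo (0.03 = 3%).
    Both inputs are uint8 arrays of identical shape (H, W, C).
    """
    if synthetic.shape != real.shape:
        raise ValueError("images must have the same shape")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    mixed = (1.0 - alpha) * synthetic.astype(np.float64) \
        + alpha * real.astype(np.float64)
    # Round and clip back into the valid 8-bit pixel range.
    return np.clip(np.rint(mixed), 0, 255).astype(np.uint8)


# Placeholder data: random noise standing in for the synthetic image
# and for the photo of a wall (hypothetical inputs, not real pictures).
rng = np.random.default_rng(0)
synthetic = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
wall = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)

blended = blend_images(synthetic, wall, alpha=0.03)

# Largest per-pixel change caused by the blend, in 8-bit levels.
max_change = int(
    np.abs(blended.astype(np.int16) - synthetic.astype(np.int16)).max()
)
print(max_change)
```

At a 3% weight no pixel can move by more than about 8 levels out of 255, so the result looks essentially unchanged to a person, which is why this kind of processing is hard to spot by eye. Whether it changes a detector’s answer depends entirely on how that detector was trained.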

No spooky eyes, no extra limbs, no eerie smile? - 100% real, genuine photograph! 👍